1.) Mapping the agent's (cube's) position into states

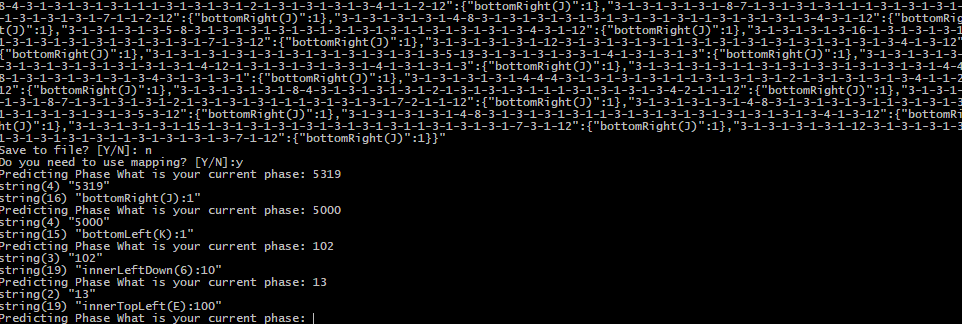

WWWWWWWWWWWWOOOOOOOYOOOYOOOYOOOYGGGGGGGGGGGGGGGGWRRRWRRRWRRRWRRRBBBBBBBBBBBBBBBBRRRRYYYYYYYYYYYY frontCounterClockwise(2) 100 WWWWWWWWWWWWWWWWOOOOOOOOOOOOOOOOGGGGGGGGGGGGGGGGRRRRRRRRRRRRRRRRBBBBBBBBBBBBBBBBYYYYYYYYYYYYYYYY

WWWWWWWWWWWWRRRROOOWOOOWOOOWOOOWGGGGGGGGGGGGGGGGYRRRYRRRYRRRYRRRBBBBBBBBBBBBBBBBOOOOYYYYYYYYYYYY frontClockwise(1) 100 WWWWWWWWWWWWWWWWOOOOOOOOOOOOOOOOGGGGGGGGGGGGGGGGRRRRRRRRRRRRRRRRBBBBBBBBBBBBBBBBYYYYYYYYYYYYYYYY

RRRRWWWWWWWWWWWWWOOOWOOOWOOOWOOOGGGGGGGGGGGGGGGGRRRYRRRYRRRYRRRYBBBBBBBBBBBBBBBBYYYYYYYYYYYYOOOO backCounterClockwise(4) 100 WWWWWWWWWWWWWWWWOOOOOOOOOOOOOOOOGGGGGGGGGGGGGGGGRRRRRRRRRRRRRRRRBBBBBBBBBBBBBBBBYYYYYYYYYYYYYYYY

OOOOWWWWWWWWWWWWYOOOYOOOYOOOYOOOGGGGGGGGGGGGGGGGRRRWRRRWRRRWRRRWBBBBBBBBBBBBBBBBYYYYYYYYYYYYRRRR backClockwise(3) 100 WWWWWWWWWWWWWWWWOOOOOOOOOOOOOOOOGGGGGGGGGGGGGGGGRRRRRRRRRRRRRRRRBBBBBBBBBBBBBBBBYYYYYYYYYYYYYYYY

WGWWWGWWWGWWWGWWOOOOOOOOOOOOOOOOGYGGGYGGGYGGGYGGRRRRRRRRRRRRRRRRBBWBBBWBBBWBBBWBYBYYYBYYYBYYYBYY innerLeftDown(6) 100 WWWWWWWWWWWWWWWWOOOOOOOOOOOOOOOOGGGGGGGGGGGGGGGGRRRRRRRRRRRRRRRRBBBBBBBBBBBBBBBBYYYYYYYYYYYYYYYY
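The sample records above appear to encode each cube state as a 96-character string: six faces of 16 stickers each, one color letter per sticker. Here is a minimal Python sketch of that encoding. The face order (`W`, `O`, `G`, `R`, `B`, `Y`) and the 4x4 face size are assumptions read off the solved string in the samples, and the helper names (`solved_state`, `split_faces`, `is_solved`) are hypothetical, not taken from the original project.

```python
# Assumption: face order and face size are inferred from the sample records,
# not confirmed by the original code.
FACE_ORDER = "WOGRBY"
STICKERS_PER_FACE = 16  # a 4x4 face


def solved_state() -> str:
    """Return the state string in which every face shows a single color."""
    return "".join(color * STICKERS_PER_FACE for color in FACE_ORDER)


def split_faces(state: str) -> list[str]:
    """Cut a state string into six 16-character face strings."""
    expected = len(FACE_ORDER) * STICKERS_PER_FACE
    if len(state) != expected:
        raise ValueError(f"expected {expected} stickers, got {len(state)}")
    return [
        state[i:i + STICKERS_PER_FACE]
        for i in range(0, expected, STICKERS_PER_FACE)
    ]


def is_solved(state: str) -> bool:
    """True when the state equals the solved string."""
    return state == solved_state()
```

Treating the whole string as the state key keeps the mapping simple, but every distinct sticker arrangement becomes its own state, which is why the table grows so quickly.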

2.) Adding random scenarios as inputs (I can't fit the file in here because it's too big, which is why finding a clever way to summarize states is really important)

Syntax: state action reward next-state
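A record in this syntax can be parsed with a few lines of Python. This is only a sketch: it assumes the four fields are separated by whitespace and that the action is written as `name(id)`, as in the sample records. The `Transition` type and `parse_transition` function are hypothetical names introduced for illustration.

```python
import re
from typing import NamedTuple


class Transition(NamedTuple):
    state: str
    action_name: str
    action_id: int
    reward: float
    next_state: str


# Assumption: actions look like "frontClockwise(1)", i.e. name(id).
ACTION_RE = re.compile(r"^(\w+)\((\d+)\)$")


def parse_transition(line: str) -> Transition:
    """Parse one 'state action reward next-state' record."""
    parts = line.split()
    if len(parts) != 4:
        raise ValueError(f"expected 4 fields, got {len(parts)}")
    state, action, reward, next_state = parts
    match = ACTION_RE.match(action)
    if match is None:
        raise ValueError(f"unrecognized action format: {action!r}")
    return Transition(
        state, match.group(1), int(match.group(2)), float(reward), next_state
    )
```

For example, `parse_transition("AB frontClockwise(1) 100 CD")` yields a `Transition` whose `action_id` is `1` and whose `reward` is `100.0` (the short `AB`/`CD` states are placeholders, not real cube states).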

Results (in principle it can solve the problem; you just need a huge amount of storage):

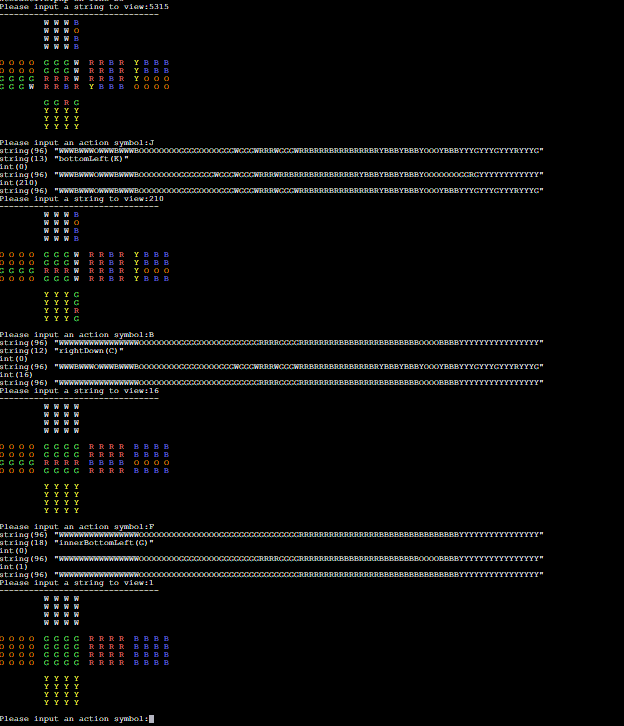
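The storage cost comes from keeping one value per (state, action) pair. The sketch below shows the standard tabular Q-learning update on a dictionary-backed table. It is not the original implementation: the learning rate `alpha`, the discount `gamma`, the `terminal` flag, and the function name `q_update` are all assumptions chosen for illustration.

```python
from collections import defaultdict


def q_update(q, state, action, reward, next_state,
             alpha=0.5, gamma=0.9, terminal=False):
    """Apply one Q-learning step:
    Q(s, a) += alpha * (r + gamma * max_a' Q(s', a') - Q(s, a)).
    """
    if terminal:
        best_next = 0.0  # no future reward after a terminal state
    else:
        # q.get avoids creating an empty entry for unseen next states.
        best_next = max(q.get(next_state, {}).values(), default=0.0)
    target = reward + gamma * best_next
    q[state][action] += alpha * (target - q[state][action])
    return q[state][action]


# One row per visited state, one column per action tried in that state.
q_table = defaultdict(lambda: defaultdict(float))
```

Because the table only stores states that were actually visited, its size tracks the training data rather than the full state space, but with 96-character cube states it still grows very quickly.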

As we observed, our agent can solve the problem, but its success depends on the data it is given. Next, we will apply Q-learning to a famous game, Flappy Bird.

Let's use Q-learning for the Flappy Bird game